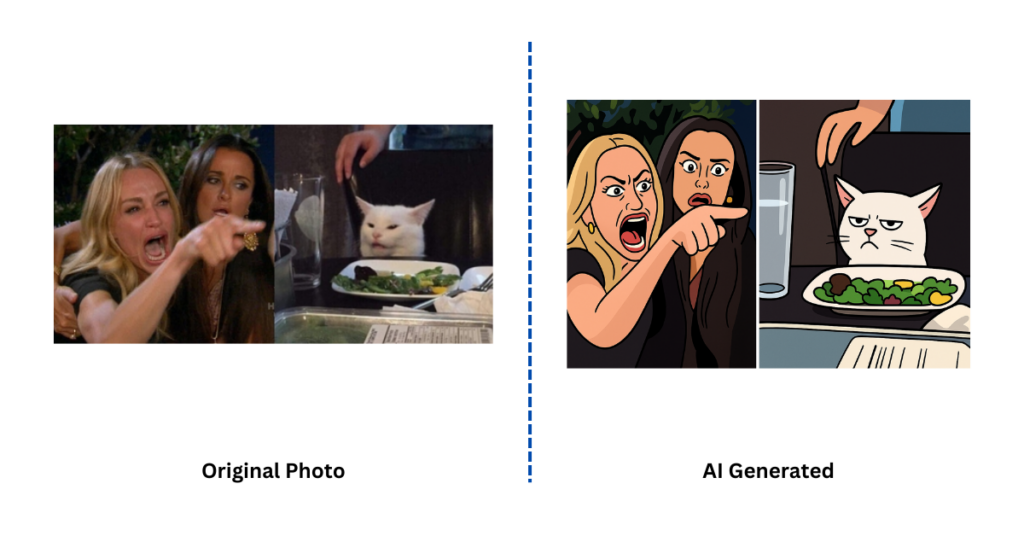

As AI-generated cartoons flood social media, a new question arises: can a machine steal an art style without giving any credit?

Over the past few days, social media feeds have been awash in pastel skies, dreamy forests, and wide-eyed characters that look lifted straight from a Studio Ghibli film. The resemblance isn’t subtle. These AI-generated images clearly mimic Hayao Miyazaki’s signature style, from the soft lighting and hand-drawn feel to the ethereal atmosphere that makes his films so magical.

Fans are mesmerized by these images, but many creators and ethicists are raising more than an eyebrow.

Behind all this beauty lies a deeper concern: what happens when an AI learns too well? What happens when it doesn’t just take inspiration from art, but absorbs and reproduces it without context, consent, or credit?

When Tribute Becomes Theft

Let’s be clear: artists have always learned from other artists. That’s how art evolves. But what we’re seeing now isn’t study or homage. It’s wholesale aesthetic replication at massive scale, with zero oversight.

These AI models were likely trained on countless artworks, some possibly scraped from the internet without permission, and absorbed stylistic DNA in the process. When the outputs become hard to tell apart from Miyazaki’s own brushstrokes, we’re no longer talking about influence. We’re talking about imitation.

And in the eyes of many artists and rights holders, that’s a serious problem.

Can We Teach AI to Forget?

At Indigma, we believe that AI should not be held back, but it should be regulated. Artists who feel their rights are threatened should have the tools to protect themselves.

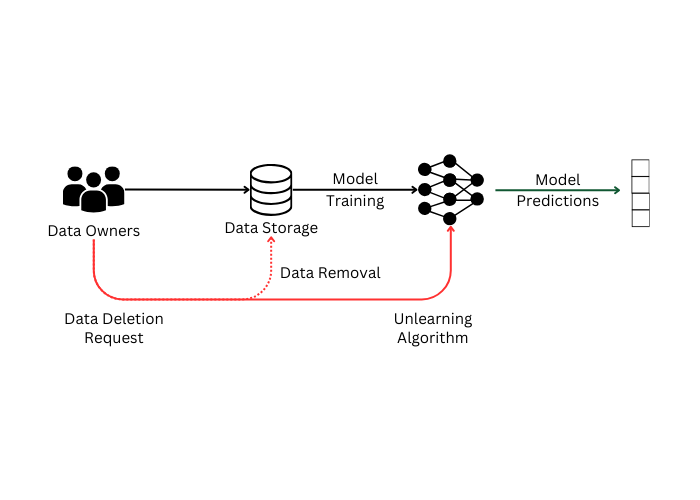

Machine Unlearning is a groundbreaking set of techniques for selectively removing the influence of specific data, or of a particular style, from an already-trained AI model. Think of it as erasing a specific memory from the machine’s brain: a way to undo unwanted training and restore ethical boundaries without rebuilding the model from scratch.
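The post does not say how unlearning is implemented, and approaches differ widely. As a minimal sketch under stated assumptions (not SFTC or any other method from the papers listed at the end of this post), the Python below shows the simplest exact form of unlearning: sharded retraining in the spirit of SISA (Bourtoule et al., 2021). Training data is split into isolated shards, each shard trains its own small model, and predictions are aggregated by majority vote. Forgetting an example means deleting it and retraining only the shard that saw it. The toy nearest-centroid "model", the feature tuples standing in for image embeddings, the crc32-based shard assignment, and every name here (`ShardedEnsemble`, `forget`, and so on) are hypothetical illustrations.

```python
import zlib
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical record: (example_id, feature vector, label).
# The feature tuples stand in for, e.g., image embeddings.
Example = Tuple[str, Tuple[float, ...], str]
Model = Dict[str, Tuple[float, ...]]


def train_centroids(examples: List[Example]) -> Model:
    """Toy per-shard model: the mean feature vector of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Counter = Counter()
    for _, x, y in examples:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] += 1
    return {y: tuple(s / counts[y] for s in acc) for y, acc in sums.items()}


def nearest_label(model: Model, x: Tuple[float, ...]) -> str:
    """Label whose centroid is closest (squared Euclidean); ties go to the smaller label."""
    return min(sorted(model), key=lambda y: sum((a - b) ** 2 for a, b in zip(model[y], x)))


class ShardedEnsemble:
    """SISA-style exact unlearning sketch: one isolated model per data shard."""

    def __init__(self, num_shards: int) -> None:
        self.num_shards = num_shards
        self.shards: List[List[Example]] = [[] for _ in range(num_shards)]
        self.models: List[Model] = [{} for _ in range(num_shards)]

    def _shard_of(self, example_id: str) -> int:
        # Deterministic assignment; the built-in hash() is salted per process for str.
        return zlib.crc32(example_id.encode("utf-8")) % self.num_shards

    def _retrain(self, k: int) -> None:
        # An empty shard yields an empty model, which predict() skips.
        self.models[k] = train_centroids(self.shards[k])

    def fit(self, examples: List[Example]) -> None:
        for ex in examples:
            self.shards[self._shard_of(ex[0])].append(ex)
        for k in range(self.num_shards):
            self._retrain(k)

    def forget(self, example_id: str) -> int:
        """Delete one example and retrain only the shard that contained it."""
        k = self._shard_of(example_id)
        kept = [ex for ex in self.shards[k] if ex[0] != example_id]
        if len(kept) != len(self.shards[k]):
            self.shards[k] = kept
            self._retrain(k)
        return k

    def predict(self, x: Tuple[float, ...]) -> str:
        # Majority vote over non-empty shard models; ties go to the smaller label.
        votes = Counter(nearest_label(m, x) for m in self.models if m)
        return min(votes, key=lambda y: (-votes[y], y))
```

Under the same assumptions, usage looks like this: call `fit` on a list of `(id, features, label)` tuples, then `forget("img-04")`. The per-shard models should then match those of a fresh ensemble fitted on the data without that example, and every other shard is left untouched. The trade-off is that each shard sees less data, so individual models are weaker. For large image generators, even retraining one shard can be expensive, which is one reason approximate, fine-tuning-based unlearning methods are also studied.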

This isn’t just a technical fix. It’s a statement of values.

In creative domains, unlearning helps protect artistic identity. It gives rights holders a mechanism to say, “That’s mine, and I don’t want it used.” And it offers developers a chance to be proactive rather than reactive, respecting creators before disputes arise.

The Ghibli-Style Trend: A Case in Point

The explosion of AI-generated Miyazaki-style art is a perfect example of what happens when technology outpaces ethics. These images are being shared and celebrated by millions, but very few people are asking where the line should be drawn.

When a model produces art that feels indistinguishable from a specific creator’s work, we risk undermining not just copyright laws, but the very notion of human creativity itself.

This is why Machine Unlearning matters: consent, originality, and artistic ownership are not optional in the age of AI. They’re essential.

At Indigma: Building Responsible AI Solutions

At Indigma, we are not just chasing innovation; we’re trying to shape it responsibly. We specialize in ethical, sustainable AI systems that prioritize fairness, transparency, and human values.

Our work in Machine Unlearning is part of a broader commitment to AI that respects the people and cultures it interacts with. Whether it’s removing unauthorized training data, safeguarding intellectual property, or supporting creators, we believe in AI that learns, and unlearns, with purpose.

To read more about Machine Unlearning and our work, see:

- Perifanis, V., Karypidis, E., Komodakis, N., & Efraimidis, P. (2024, June). SFTC: Machine Unlearning via Selective Fine-tuning and Targeted Confusion. In Proceedings of the 2024 European Interdisciplinary Cybersecurity Conference (pp. 29-36).

- Kurmanji, M., Triantafillou, P., Hayes, J., & Triantafillou, E. (2023). Towards unbounded machine unlearning. Advances in Neural Information Processing Systems, 36, 1957-1987.

- Liu, S., Yao, Y., Jia, J., Casper, S., Baracaldo, N., Hase, P., … & Liu, Y. (2025). Rethinking machine unlearning for large language models. Nature Machine Intelligence, 1-14.

TL;DR

- AI-generated Ghibli-style images are going viral, but they raise serious ethical and legal questions.

- Machine Unlearning offers a way to erase specific influences from AI models, protecting artists and their work.

- This is a crucial step toward ethical AI development that respects creative integrity and human contribution.

- At Indigma, we’re pioneering responsible AI solutions and helping businesses stay ahead of the curve.

Need Help Building Ethical AI?

If you’re developing AI products and want to make sure they’re fair, transparent, and respectful of creators, let’s talk.

At Indigma, we help businesses embed Responsible AI into everything they build, from data governance to Machine Unlearning and beyond.

Reach out for tailored solutions in ethical AI, sustainability, and long-term trust.

Let’s build AI that doesn’t just impress, but also respects.